Depending on the type and variation of the training data, machine learning can be roughly categorized into three frameworks: supervised learning, unsupervised learning, and reinforcement learning. Multiple instance learning (MIL) falls under the supervised learning framework, in which every training instance normally has a label, either discrete or real-valued. MIL deals with problems where knowledge of the labels in the training set is incomplete. More precisely, in multiple-instance learning the training set consists of labeled “bags”, each of which is a collection of unlabeled instances. A bag is labeled positive if at least one instance in it is positive, and negative if all instances in it are negative. The goal of MIL is to predict the labels of new, unseen bags.
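The bag-labeling rule stated above can be sketched in a few lines of Python. This is only an illustration: the bag names and the instance labels are made up, and in a real MIL problem the instance labels are hidden from the learner.

```python
from typing import Dict, List, Sequence


def bag_label(instance_labels: Sequence[bool]) -> bool:
    """A bag is positive iff at least one of its instances is positive."""
    return any(instance_labels)


# Hypothetical bags with their (normally unobserved) instance labels.
bags: Dict[str, List[bool]] = {
    "bag_a": [False, False, True],   # one positive instance -> positive bag
    "bag_b": [False, False],         # all negative -> negative bag
    "bag_c": [True, True],
}

labels = {name: bag_label(instances) for name, instances in bags.items()}
print(labels)
```

Only the values in `labels` would be available at training time; recovering which instances caused a positive label is the hard part of the problem.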

Contents

- Background

- Definitions

- Assumptions

- Standard assumption

- Presence-, threshold-, and count-based assumptions

- GMIL assumption

- Collective assumption

- Algorithms

- Instance-based algorithms

- Iterated-discrimination

- Diverse Density

- Metadata-based (or embedding-based) algorithms

- Generalizations

- References

A simple example illustrates MIL. Imagine several people, each of whom has a key chain that contains a few keys. Some of these people are able to enter a certain room, and some aren’t. The task is then to predict whether a certain key or a certain key chain can get you into that room. To solve this problem we need to find the exact key that is common to all the “positive” key chains. If we can correctly identify this key, we can also correctly classify an entire key chain: positive if it contains the required key, or negative if it doesn’t.
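The key-chain reasoning can be sketched as set operations. This is a minimal sketch with hypothetical key names. It assumes noise-free labels and that exactly one kind of key opens the door.

```python
def candidate_keys(positive_chains, negative_chains):
    """Keys found on every positive chain and on no negative chain."""
    if not positive_chains:
        raise ValueError("at least one positive key chain is required")
    common = set.intersection(*(set(chain) for chain in positive_chains))
    seen_on_negative = set().union(*(set(chain) for chain in negative_chains))
    return common - seen_on_negative


def opens_door(chain, keys):
    """Classify a whole key chain: positive if it holds a candidate key."""
    return any(key in keys for key in chain)


# Hypothetical data: "k4" is the only key shared by all positive chains.
positive = [{"k1", "k4", "k7"}, {"k2", "k4"}, {"k4", "k9"}]
negative = [{"k1", "k2"}, {"k7", "k9"}]

keys = candidate_keys(positive, negative)
print(keys)
print(opens_door({"k3", "k4"}, keys), opens_door({"k1", "k9"}, keys))
```

With noisy labels or several door-opening keys, the intersection can come out empty, which is one reason practical MIL algorithms use softer criteria.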

Background

Keeler et al., in work from the early 1990s, were the first to explore the area of MIL. The actual term multi-instance learning was introduced in the middle of the 1990s by Dietterich et al., while they were investigating the problem of drug activity prediction. They tried to create a learning system that could predict whether a new molecule was qualified to make some drug by analyzing a collection of known molecules. Molecules can have many alternative low-energy states, but only one, or some, of them are qualified to make a drug. The problem arose because scientists could only determine whether a molecule is qualified, but could not say exactly which of its low-energy shapes are responsible for that.

One of the proposed ways to solve this problem was to use supervised learning and regard all the low-energy shapes of a qualified molecule as positive training instances, and all the low-energy shapes of unqualified molecules as negative instances. Dietterich et al. showed that such a method would suffer from high false-positive noise, because every low-energy shape of a qualified molecule that is not actually responsible for its activity is mislabeled as positive, and thus it wasn’t really useful. Their approach was to regard each molecule as a labeled bag, and all the alternative low-energy shapes of that molecule as instances in the bag, without individual labels, thus formulating multiple-instance learning.

The solution to the multiple instance learning problem that Dietterich et al. proposed is the axis-parallel rectangle (APR) algorithm. It searches for appropriate axis-parallel rectangles constructed by the conjunction of the features. They tested the algorithm on the Musk dataset, a concrete test set for drug activity prediction and the most widely used benchmark in multiple-instance learning. The APR algorithm achieved the best result, but it should be noted that APR was designed with the Musk data in mind.

The problem of multi-instance learning is not unique to drug discovery. In 1998, Maron and Ratan applied multiple instance learning to scene classification in machine vision and devised the Diverse Density framework. Given an image, an instance is taken to be one or more fixed-size subimages, and the bag of instances is taken to be the entire image. An image is labeled positive if it contains the target scene (a waterfall, for example) and negative otherwise. Multiple instance learning can be used to learn the properties of the subimages which characterize the target scene. Since then, these frameworks have been applied to a wide spectrum of applications, ranging from image concept learning and text categorization to stock market prediction.
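As a rough illustration of how an image becomes a bag, the sketch below cuts a grayscale image into fixed-size subimages and treats each flattened subimage as one instance. The function name, the patch size, and the stride are assumptions made for this example. They are not taken from Maron and Ratan's work.

```python
import numpy as np


def image_to_bag(image: np.ndarray, patch: int, stride: int) -> np.ndarray:
    """Slide a patch x patch window over a 2-D image.

    Returns an array of shape (n_instances, patch * patch); each row is one
    flattened subimage. The image must be at least patch x patch pixels.
    """
    height, width = image.shape
    instances = [
        image[r:r + patch, c:c + patch].ravel()
        for r in range(0, height - patch + 1, stride)
        for c in range(0, width - patch + 1, stride)
    ]
    return np.stack(instances)


# Hypothetical 6x6 "image" whose pixel values are just 0..35.
image = np.arange(36, dtype=float).reshape(6, 6)
bag = image_to_bag(image, patch=2, stride=2)
print(bag.shape)   # 9 non-overlapping 2x2 patches, 4 pixels each
print(bag[0])      # top-left patch
```

The whole image carries the label (for example "contains a waterfall"), while the rows of `bag` stay unlabeled.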

Definitions

If the space of instances is

Assumptions

Most of the work on multiple instance learning, including the early papers of Dietterich et al. (1997) and Maron & Lozano-Pérez (1997), makes an assumption regarding the relationship between the instances within a bag and the class label of the bag. Because of its importance, that assumption is often called the standard MI assumption.

Standard assumption

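The standard assumption takes each instance to have an associated binary label that is hidden from the learner. A bag is labeled positive if at least one of its instances has a positive label, and negative if all of its instances have negative labels.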

The standard assumption might be viewed as too strict, and therefore in recent years researchers have tried to relax it, which gave rise to other, looser assumptions. The reason is the belief that the standard MI assumption is appropriate for the Musk dataset, but since MIL can be applied to numerous other problems, different assumptions could be more appropriate. Guided by that idea, Weidmann formulated a hierarchy of generalized instance-based assumptions for MIL. It consists of the standard MI assumption and three types of generalized MI assumptions, each more general than the last: presence-based, threshold-based, and count-based.

Presence-, threshold-, and count-based assumptions

The presence-based assumption is a generalization of the standard assumption, wherein a bag must contain one or more instances that belong to a set of required instance-level concepts in order to be labeled positive. Formally, a bag is positive if and only if every required instance-level concept occurs in it at least once.

A further generalization comes with the threshold-based assumption, where each required instance-level concept must occur not only once in a bag, but some minimum (threshold) number of times in order for the bag to be labeled positive. Each required instance-level concept is assigned its own threshold, and a bag is positive if and only if every required concept occurs at least that many times.

The count-based assumption is a final generalization which enforces both lower and upper bounds for the number of times a required concept can occur in a positively labeled bag. Each required instance-level concept has a lower and an upper bound, and a bag is positive if and only if the number of occurrences of every required concept lies within its bounds.
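The three assumptions of this hierarchy can be compared side by side with a small sketch. It assumes each instance has already been mapped to the name of its instance-level concept, which a real learner cannot observe. The concept names and bounds are hypothetical.

```python
from collections import Counter


def presence_based(bag, required):
    """Positive iff every required concept occurs at least once."""
    counts = Counter(bag)
    return all(counts[c] >= 1 for c in required)


def threshold_based(bag, thresholds):
    """Positive iff every required concept occurs at least its threshold."""
    counts = Counter(bag)
    return all(counts[c] >= t for c, t in thresholds.items())


def count_based(bag, bounds):
    """Positive iff every required concept's count lies within [lo, hi]."""
    counts = Counter(bag)
    return all(lo <= counts[c] <= hi for c, (lo, hi) in bounds.items())


# A bag described by the (hypothetical) concept of each of its instances.
bag = ["a", "a", "b", "c"]

print(presence_based(bag, {"a", "b"}))                  # both occur
print(threshold_based(bag, {"a": 2, "b": 2}))           # "b" occurs only once
print(count_based(bag, {"a": (1, 2), "b": (1, 1)}))     # within both ranges
print(count_based(bag, {"a": (1, 1)}))                  # "a" occurs too often
```

Setting every threshold to 1 reduces `threshold_based` to `presence_based`, and removing the upper bounds reduces `count_based` to `threshold_based`, which mirrors the nesting of the hierarchy.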

GMIL assumption

Scott, Zhang, and Brown (2005) describe another generalization of the standard model, which they call "generalized multiple instance learning" (GMIL). The GMIL assumption specifies a set of required instances

Collective assumption

In contrast to the previous assumptions where the bags were viewed as fixed, the collective assumption views a bag

Since

While the collective assumption weights every instance with equal importance, Foulds extended the collective assumption to incorporate instance weights. The weighted collective assumption is then that

Algorithms

There are two major flavors of algorithms for multiple instance learning: instance-based algorithms and metadata-based (or embedding-based) algorithms. The term "instance-based" denotes that the algorithm attempts to find a set of representative instances based on an MI assumption and to classify future bags from these representatives. By contrast, metadata-based algorithms make no assumptions about the relationship between instances and bag labels, and instead try to extract instance-independent information (or metadata) about the bags in order to learn the concept. For a survey of some of the modern MI algorithms, see Foulds and Frank.

Instance-based algorithms

The earliest proposed MI algorithms were a set of "iterated-discrimination" algorithms developed by Dietterich et al., and Diverse Density, developed by Maron and Lozano-Pérez. Both of these algorithms operate under the standard assumption.

Iterated-discrimination

Broadly, all of the iterated-discrimination algorithms consist of two phases. The first phase is to grow an axis parallel rectangle (APR) which contains at least one instance from each positive bag and no instances from any negative bags. This is done iteratively: starting from a random instance

After the first phase, the APR is thought to tightly contain only the representative attributes. The second phase expands this tight APR as follows: a Gaussian distribution is centered at each attribute and a looser APR is drawn such that positive instances will fall outside the tight APR with fixed probability. Though iterated discrimination techniques work well with the standard assumption, they do not generalize well to other MI assumptions.
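The growth step of the first phase can be sketched as below. This is a simplified illustration, not the published algorithm. It starts from a fixed seed instead of a random one, and for each further positive bag it absorbs the instance that enlarges the rectangle least, measured by the sum of side lengths (an assumption of this sketch). The exclusion of negative instances and the second, expansion phase are left out.

```python
import numpy as np


def grow_apr(positive_bags, seed_bag=0, seed_instance=0):
    """Greedily grow a rectangle covering one instance per positive bag."""
    seed = positive_bags[seed_bag][seed_instance]
    lower, upper = seed.copy(), seed.copy()
    for i, bag in enumerate(positive_bags):
        if i == seed_bag:
            continue
        # Candidate rectangles after absorbing each instance of this bag.
        new_lower = np.minimum(lower, bag)
        new_upper = np.maximum(upper, bag)
        size = (new_upper - new_lower).sum(axis=1)
        best = int(np.argmin(size))
        lower, upper = new_lower[best], new_upper[best]
    return lower, upper


def classify_bag(apr, bag):
    """Standard assumption: positive iff some instance lies inside the APR."""
    lower, upper = apr
    return bool(np.any(np.all((bag >= lower) & (bag <= upper), axis=1)))


# Hypothetical positive bags; each holds one instance near (1, 1).
positive_bags = [
    np.array([[1.0, 1.0], [5.0, 5.0]]),
    np.array([[1.2, 0.9], [9.0, 0.0]]),
    np.array([[0.8, 1.1], [4.0, 8.0]]),
]
apr = grow_apr(positive_bags)
print(apr)
print(classify_bag(apr, np.array([[1.0, 1.0], [7.0, 7.0]])))
print(classify_bag(apr, np.array([[5.0, 5.0]])))
```

A poor seed (for example the instance at (5, 5)) yields a much larger rectangle, which is part of why the published algorithms add further selection steps.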

Diverse Density

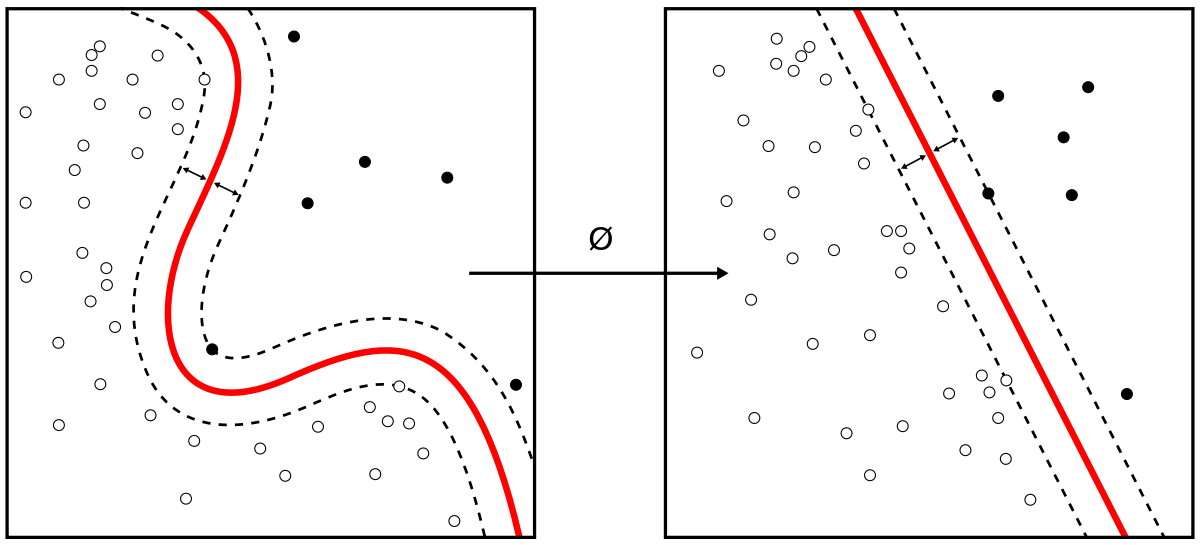

In its simplest form, Diverse Density (DD) assumes a single representative instance

Let

A number of single-instance algorithms have also been adapted to a multiple-instance context under the standard assumption.

After 2000, there was a movement away from the standard assumption and toward algorithms designed to tackle the more general assumptions listed above.

Because of the high dimensionality of the new feature space and the cost of explicitly enumerating all APRs of the original instance space, GMIL-1 is inefficient both in terms of computation and memory. GMIL-2 was developed as a refinement of GMIL-1 in an effort to improve efficiency. GMIL-2 pre-processes the instances to find a set of candidate representative instances. GMIL-2 then maps each bag to a Boolean vector, as in GMIL-1, but only considers APRs corresponding to unique subsets of the candidate representative instances. This significantly reduces the memory and computational requirements.

Metadata-based (or embedding-based) algorithms

By mapping each bag to a feature vector of metadata, metadata-based algorithms allow the flexibility of using an arbitrary single-instance algorithm to perform the actual classification task. Future bags are simply mapped (embedded) into the feature space of metadata and labeled by the chosen classifier. Therefore, much of the focus for metadata-based algorithms is on which features or which type of embedding leads to effective classification. Note that some of the previously mentioned algorithms, such as TLC and GMIL, could be considered metadata-based.
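The embedding idea can be sketched with a deliberately simple choice of metadata: the per-feature minimum, maximum, and mean of a bag. Both this embedding and the small nearest-centroid classifier below are assumptions chosen for brevity. Any single-instance classifier could take the classifier's place.

```python
import numpy as np


def embed_bag(bag: np.ndarray) -> np.ndarray:
    """Map a bag (n_instances, n_features) to one fixed-length vector."""
    return np.concatenate([bag.min(axis=0), bag.max(axis=0), bag.mean(axis=0)])


class CentroidClassifier:
    """Minimal single-instance classifier: assign the nearest class centroid."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        dists = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[dists.argmin(axis=1)]


# Hypothetical training bags: positive bags contain an instance near (5, 5).
train_bags = [
    np.array([[0.0, 0.0], [5.0, 5.0]]),
    np.array([[0.2, 0.1], [4.8, 5.1]]),
    np.array([[0.0, 0.1], [0.3, 0.2]]),
    np.array([[0.1, 0.0], [0.2, 0.3]]),
]
train_labels = np.array([1, 1, 0, 0])

X_train = np.stack([embed_bag(b) for b in train_bags])
clf = CentroidClassifier().fit(X_train, train_labels)

new_bags = [np.array([[0.1, 0.1], [5.0, 4.9]]), np.array([[0.1, 0.2], [0.2, 0.1]])]
predictions = clf.predict(np.stack([embed_bag(b) for b in new_bags]))
print(predictions)
```

Because bags of any size map to vectors of the same length, the classifier never sees individual instances, only the bag-level summary.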

Wang and Zucker (2000) define two variations of kNN, Bayesian-kNN and citation-kNN, as adaptations of the traditional nearest-neighbor problem to the multiple-instance setting.
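Nearest-neighbor methods need a distance between bags rather than between instances. The sketch below uses the minimal Hausdorff distance (the smallest distance between any pair of instances drawn from the two bags) with a plain majority vote. It is a simplified illustration under those assumptions and implements neither the Bayesian vote of Bayesian-kNN nor the "citer" step of citation-kNN.

```python
from collections import Counter

import numpy as np


def min_hausdorff(bag_a: np.ndarray, bag_b: np.ndarray) -> float:
    """Smallest Euclidean distance between an instance of A and one of B."""
    diffs = bag_a[:, None, :] - bag_b[None, :, :]
    return float(np.sqrt((diffs ** 2).sum(axis=2)).min())


def knn_bag_label(query, train_bags, train_labels, k=3):
    """Majority vote among the k training bags closest to the query bag."""
    distances = [min_hausdorff(query, bag) for bag in train_bags]
    nearest = np.argsort(distances)[:k]
    votes = Counter(train_labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training bags; positive bags hold an instance near (5, 5).
train_bags = [
    np.array([[0.0, 0.0], [5.0, 5.0]]),
    np.array([[5.2, 5.1], [9.0, 9.0]]),
    np.array([[0.1, 0.1], [1.0, 0.0]]),
    np.array([[0.0, 1.0], [1.0, 1.0]]),
    np.array([[9.0, 0.0], [8.0, 1.0]]),
]
train_labels = [1, 1, 0, 0, 0]

query = np.array([[5.1, 5.0], [3.0, 3.0]])
prediction = knn_bag_label(query, train_bags, train_labels, k=3)
print(prediction)
```

With the minimal Hausdorff distance a single close pair of instances makes two bags neighbors, which fits the standard assumption but also makes the vote sensitive to negative bags that happen to contain a nearby instance.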

Generalizations

So far this article has considered multiple instance learning exclusively in the context of binary classifiers. However, the generalizations of single-instance binary classifiers can carry over to the multiple-instance case.